As readily available Large Language Model (LLM) platforms like ChatGPT, Bard AI, Mistral, and Bing AI become more prevalent, business leaders are increasingly exploring the potential of Generative AI (Gen AI) technologies to transform their business operations. For instance, according to one study, 78% of organizations plan to invest in AI platforms over the next 12 months. However, business owners need reassurance that they’re getting the most out of what this technology has to offer. LLMs, while…

Here at Stratio, we’re proud to announce that we’ve joined forces with global consulting powerhouse Stratence Partners to help businesses…

Leading Generative AI Data Fabric specialist Stratio BD today announces it has been included in Gartner’s highly vaunted Data Integration…

AI has become a hot-button topic over the past year and is set to transform a swathe of industries, including the world…

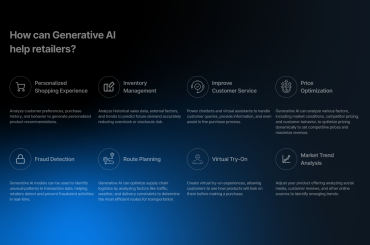

Introduction: In the highly competitive retail industry, data is, once again, queen. Decisions are made quicker, options are too diverse,…

Introduction: Companies must switch to automation to reduce operating costs. This is imperative if they are to survive and thrive in an…

With Stratio Generative AI Data Fabric now available on AWS Marketplace, enterprises worldwide can more easily access the most innovative…